Published in Fall 2025

Learning analytics is an increasingly important topic in organizations looking to retain employees, improve knowledge sharing and remain competitive in the market. But just talking about learning analytics isn’t enough. Measuring whether learning is actually successful comes with many unexpected hurdles, especially when organizational leaders want learning justification but don’t understand why the learning function needs access to broader departmental data. Luckily, the Kirkpatrick Evaluation Model, specifically levels three and four, offers learning leaders a clear path through these challenges.

The Kirkpatrick Evaluation Model

While there are many models for evaluating learning, none is more famous than the Kirkpatrick Model. The first two of the four levels in the model are common in learning and development (L&D) — surveys and assessments. Surveys ask the participants to reflect and share their reactions to the learning experience. Assessments demand a bit more from the participants in the form of recalling information they learned from the training on a graded rubric, providing insights into the knowledge gained. These are two critical tools in evaluating training — but relying on them as the primary justification for the learning program is a mistake.

Surveys and assessments simply do not reflect the interests of the organization at large. Instead, the first two levels of the Kirkpatrick Model speak only to the learning functions that create and host the training. For example, facilitators can gain awareness around the efficacy of the training and content presented in levels one and two, but a knowledge-check style assessment cannot discern whether the training had an impact on the organization. Presenting this data as justification for learning programs creates division in organizational partnerships and expectations, so it is best to keep these metrics at the learning level.

To elevate measurement and highlight the value of learning programs, L&D leaders must refine their analytics approach to focus on levels three and four in the Kirkpatrick Model. These levels analyze the behaviors changed and organizational outcomes after the training has concluded. Achieving these measurements requires collaborative, organization-wide partnerships, investments in reporting technologies and, oftentimes, vulnerability from the learning function. However, these become easier as the organization shifts to a learning-centric and analytical approach for solving deep-rooted issues.

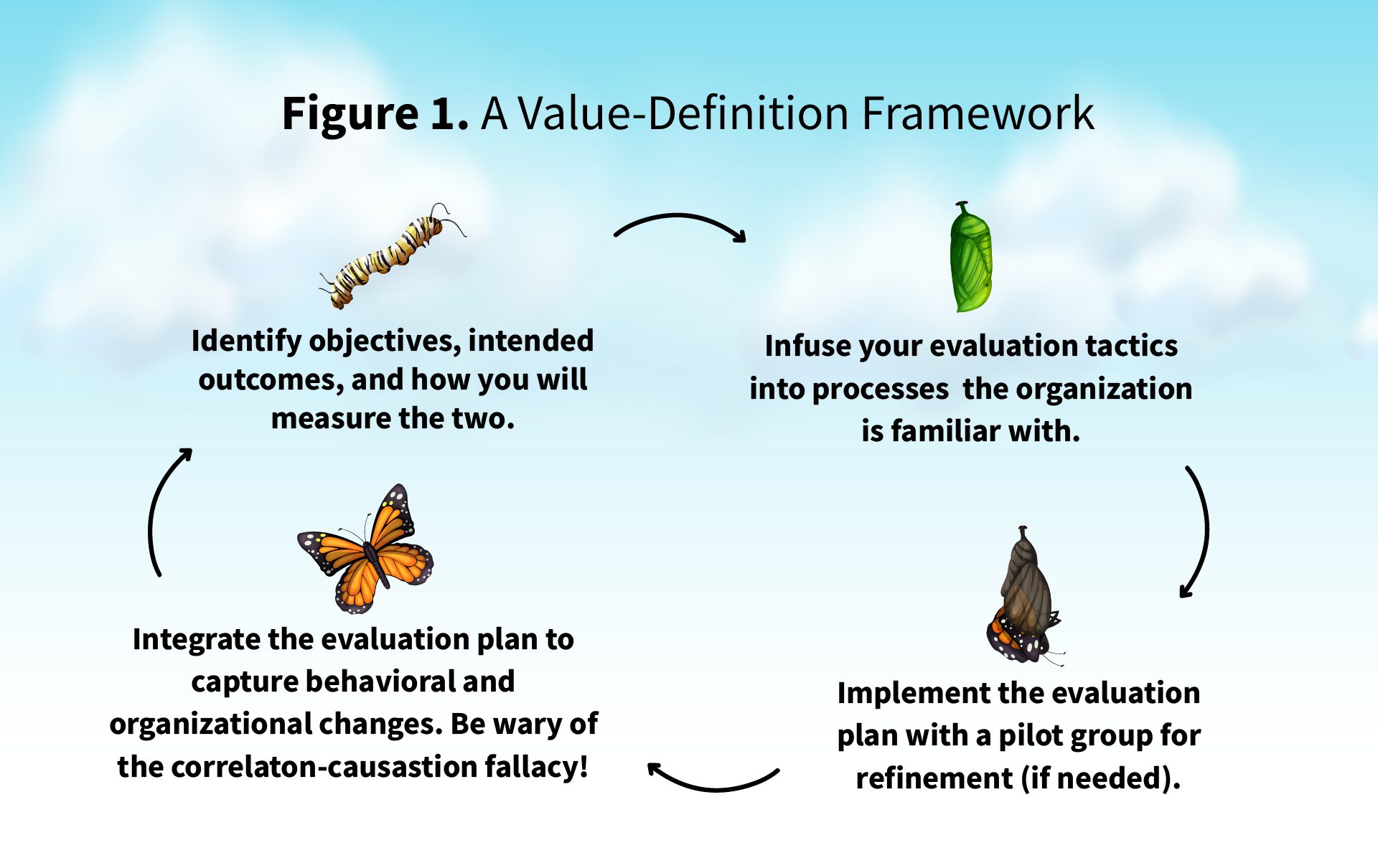

A Value-Definition Framework

This article will present a four-step approach for getting to levels three and four of the Kirkpatrick Model, tying traditional learning analysis systems with fundamental project management disciplines (see Figure 1). In doing so, learning functions become champions within the organization and earn long-sought trust with leaders.

Phase 1: Identify

Morris Mandel famously said, “Putting your best foot forward at least keeps it out of your mouth.” This is the right mindset to have when designing learning with levels three and four in mind. To serve an organization’s learning needs, you must first understand what is needed and why. This is aptly named a needs assessment or root cause analysis, and it provides the necessary framework to build an advanced learning analytics strategy.

Let’s use a hypothetical example: When a request from the business comes in, the learning team’s first step should be to break up the request into various sections and analyze them independently. Here’s how that could look:

Request: An operations team leader requests training to prepare their team for a system update occurring next month.

Questions to ask:

- “Training”: What does the ideal training look like? How does the department currently train on new systems and how will this training need to be modified? Which modalities can deliver the highest impact for the learners?

- “System update”: Will a test environment be available before the launch of the update? How will this update change current role functions or expectations?

- “Next month”: Do these updates occur on a regular basis or is this a one-off update? How early does the training need to be available before the update occurs? Who else (IT, information security, etc.) needs to be involved in the creation of the training?

Importantly, the final question should always be: What will the success of this training look like to you?

This series of questions, which are general enough to apply to many types of requests, elevates the traditional partnership with organizational stakeholders in two substantial ways. First, it does not prompt or push the requestor to a specific solution; instead, the questions are open-ended and encourage building conclusions as a team. This keeps learning professionals from being seen as order-takers and encourages dynamic conversations and debates about different learning modalities.

The second and more measurable impact of root cause analysis is through the final question. Asking stakeholders what success looks like to them will almost always set the stage for deeper analysis of the training’s impact. In the system update example, the stakeholder might say that success of the training looks like reduced customer service times and higher customer satisfaction scores — two common intended outcomes that, despite their vagueness, offer an open door in which learning professionals can put their best foot forward. This can be done by adding numeric goals to the outcomes when possible.

In the case of the dreaded response, “I don’t know, I was just told we need it,” review the sets of questions asked and drill down further into the learning objective. Every learning project needs an objective and expected outcome; the former acts as the guiding light while the expected outcome acts as the anchor to keep the solution within scope and feasibility. Once these are defined, write them out as statements:

- Objective: The training for the upcoming system upgrade must prepare users for the new layout and tools available and offer the ability for hands-on practice.

- Expected outcomes: This training will support a reduced time to serve customer needs in the new system while increasing customer satisfaction.

With the project goals (not actual solutions) identified, the learning professional can transition into phase two of the value-definition framework.

Phase 2: Infuse

The second phase focuses on infusing the learning analytics plan into familiar organizational processes. A common mistake learning professionals make is assuming they have stakeholder buy-in: believing that everyone understands not only what the evaluation plan is but also how it will affect the working relationship. This goes back again to Morris Mandel’s wise words: Put your best foot forward now so it doesn’t end up in your mouth later. By infusing the learning evaluation plan into processes that already work, you can give stakeholders the opportunity to experience and interact with it before it impacts the success of the training initiative.

Let’s go back to the example of the training requested for a system update “next month.” On this timeline, learning professionals should not only build the new training but also infuse their evaluation plan into the current system utilization. This might include:

- Finding and tracking the current state of metrics identified in phase one (in this example, time spent serving customer needs and customer satisfaction)

- Observing the system being used in real-world situations to better understand how this training should be shaped

- Meeting with impacted users (formally or informally) to understand the current methods of training around this system and what opportunities they have previously identified
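
As a rough illustration of the first item, the current state of the two phase-one metrics could be captured in a short script before the training launches. This is a minimal sketch, not a prescribed method: the record layout, the 1-5 satisfaction scale, the `summarize` helper and every number below are hypothetical and stand in for whatever the operations team actually reports.

```python
from statistics import mean

# Hypothetical pre-training records, made up for illustration only:
# (handle time in minutes, customer satisfaction score on an assumed 1-5 scale).
baseline_records = [
    (12.0, 4),
    (15.0, 3),
    (9.0, 5),
    (14.0, 4),
]


def summarize(records):
    """Return the average handle time and average satisfaction score."""
    handle_times = [minutes for minutes, _ in records]
    scores = [score for _, score in records]
    return {
        "avg_handle_minutes": mean(handle_times),
        "avg_satisfaction": mean(scores),
    }


# Capture the baseline once, before the training launches.
baseline = summarize(baseline_records)
print(baseline)
```

Saving this snapshot alongside the agreed objective gives the later phases a fixed point of comparison.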

The infusion phase is essential for building organizational partnerships that last long after the initial training need is satisfied. It defines the way that the learning team works with stakeholders, not for them, and creates a cohesive launch for phases three and four of the framework.

Phase 3: Implement

The implementation phase is everyone’s favorite. The hard work is over, and it is time to reap the benefits of the training initiative — in theory. In reality, rushed implementations can backfire, leading to the opposite of what was intended. This phase needs to be just as thoughtful and refined as the others to ensure you get the outcomes you are aiming for.

One way to structure the implementation is with a pilot group, a small group of users who receive access to the new learning ahead of time, enabling the learning team to refine the offering before a wider-scale launch. This is not necessary in every case, but for the right projects it can be a critical opportunity for the creators of the training to test its efficacy and impact on the target audience. However, this only works if the learning professional knows what they are measuring between the pilot and control groups. Having a pilot group just for the sake of having one is not a valuable use of time for the users, stakeholders or learning professionals.
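
To make "knowing what you are measuring" concrete, here is a minimal sketch of comparing one metric between a pilot group and a comparison group that has not yet taken the training. The `compare_groups` helper and all handle times are hypothetical, and a simple difference in averages is only one assumed way to frame the comparison; small groups can differ by chance alone.

```python
from statistics import mean


def compare_groups(pilot, comparison):
    """Return the difference in averages (pilot minus comparison) for one metric."""
    return mean(pilot) - mean(comparison)


# Hypothetical handle times in minutes, made up for illustration only.
pilot_handle_minutes = [10.0, 11.0, 9.0]
comparison_handle_minutes = [12.0, 13.0, 14.0]

difference = compare_groups(pilot_handle_minutes, comparison_handle_minutes)
print(f"Pilot minus comparison: {difference:+.1f} minutes")
```

Deciding on the metric and the comparison before the pilot starts is what keeps the pilot from being run just for the sake of having one.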

In the system update example, a pilot group would likely not work; the timeline is very short, and the system will likely update for all users at the same time, meaning everyone must be upskilled on the changes. To identify the need for a pilot group, refine the needs assessment in phase one to include questions on impacted users, scale of changes being made and timeline of change implementation. However, try not to change the objectives and expected outcomes after they are agreed upon, because this can prevent the evaluation plan from continuing on to phase four.

Phase 4: Integrate

With the first three phases complete, the training initiative launched and stakeholders (ideally) happy, learning professionals might default to closing the chapter and beginning on the next training request in their queue. However, doing this misses a key opportunity to establish lasting partnerships — and breaks the commitment made in phase one to measure the success of the training. Integrating the evaluation plan allows learning leaders to capture rich data on behavioral change (Kirkpatrick Level 3) and organizational outcomes (Kirkpatrick Level 4) from the people who went through the training.

In our system update example, the learning professional must continue to work with stakeholders to acquire the data on customer service time and satisfaction scores. This should be a straightforward request because of the work done in phase one to identify expected outcomes and in phase two to infuse metrics into the partnership.

Elevating to phase four means creating reports that go beyond metrics to tell a compelling story from data in a relatable, accessible and actionable way. In the example, the learning professional could build a report that shows the decreasing customer service handle times for the operations department and the increasing customer satisfaction scores with direct quotes. This highlights the learning value and fosters a future partnership with stakeholders that is based on collaboration, mutual respect and concrete outcomes.
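
The numeric core of such a report can be sketched in a few lines. This is an assumption-laden example rather than a reporting standard: the metric names, the before and after figures and the `percent_change` and `build_report` helpers are all hypothetical, and a real report would add the direct quotes and context described above.

```python
def percent_change(before, after):
    """Percent change from before to after; a negative value means a decrease."""
    return (after - before) / before * 100


# Hypothetical (before, after) figures, made up for illustration only.
metrics = {
    "Average handle time (minutes)": (12.5, 10.0),
    "Customer satisfaction (1-5)": (4.0, 4.4),
}


def build_report(metrics):
    """Return one readable line per metric with its before, after and percent change."""
    lines = []
    for name, (before, after) in metrics.items():
        change = percent_change(before, after)
        lines.append(f"{name}: {before} -> {after} ({change:+.1f}%)")
    return lines


report = build_report(metrics)
for line in report:
    print(line)
```

A change like this shows that a metric moved, not why it moved, so the figures are best presented next to the other factors that changed over the same period.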

However, I’d caution against concluding that the training was a success just because the organization hit its goals. In the case of the system update, it could be the changes made to the system that impacted the service times, regardless of the training. Rather than leaving stakeholders to spot this for themselves, avoid the false cause fallacy: present the training as one contributor to the expected outcome alongside the other influences. This increases the credibility of the learning initiative and positions the learning team as a partner in success.

Getting Started

To put the value-definition framework into your own learning projects, remember the following:

- Stop looking at only surveys and assessments as a means of justifying the investment in learning. Instead, start finding out what the organization genuinely needs by building partnerships, rapport and learning advocates.

- Identify the objectives and expected outcomes as a first step, before any solutions are created, and understand the relevant behavioral and organizational metrics to capture.

- Infuse this higher-level approach of evaluation into existing processes within the organization to highlight how it will work for the new initiative.

- Network with other L&D professionals to share experiences and learn new technologies and techniques.

With this approach to measuring the value of organizational learning, your organization will view L&D as a partner in success.